How We’re Building QA That Thinks

At Quash, progress has rarely been linear. Each phase has been an experiment: some ideas clicked, others fell short, and every turn shaped the system we’re now preparing to ship.

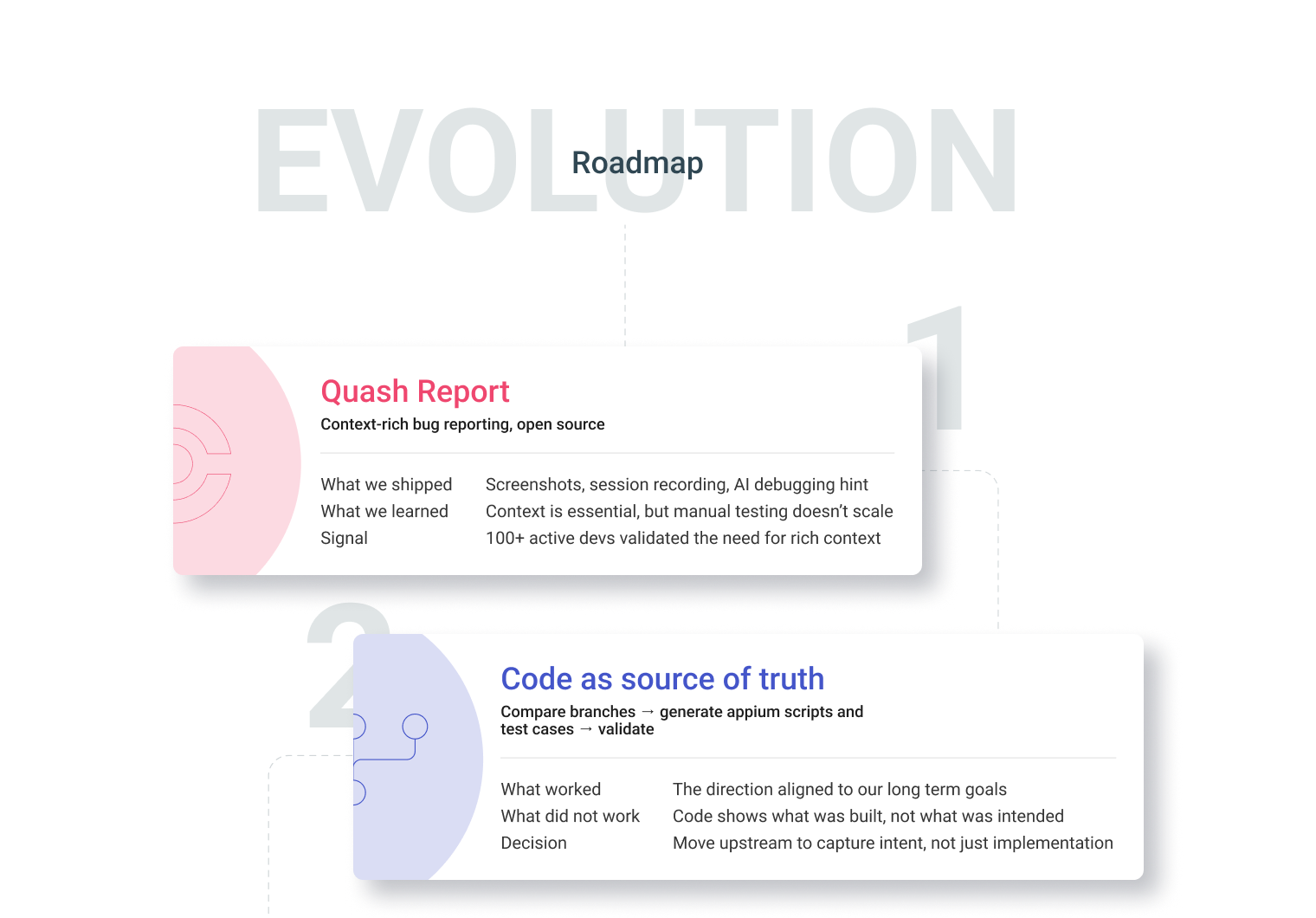

Starting with Bug Reporting

We started by tackling something small but essential: bug reporting. Our open-source SDK, Quash Report, let testers shake their device to capture logs, screenshots, and even AI debugging suggestions. Over a hundred developers used it, which told us we were on the right track. But it also exposed the limits of manual-first QA. Context mattered, but context alone wasn’t enough.

From Automation to Intent

The next phase pushed us toward automation. We assumed code could be the ultimate source of truth: compare branches, generate test cases, validate workflows. That became Quash Automate. It worked well for a while, but we kept running into the same wall: code reflects what was built, not what was meant to be built. That gap in intent was too big to ignore.

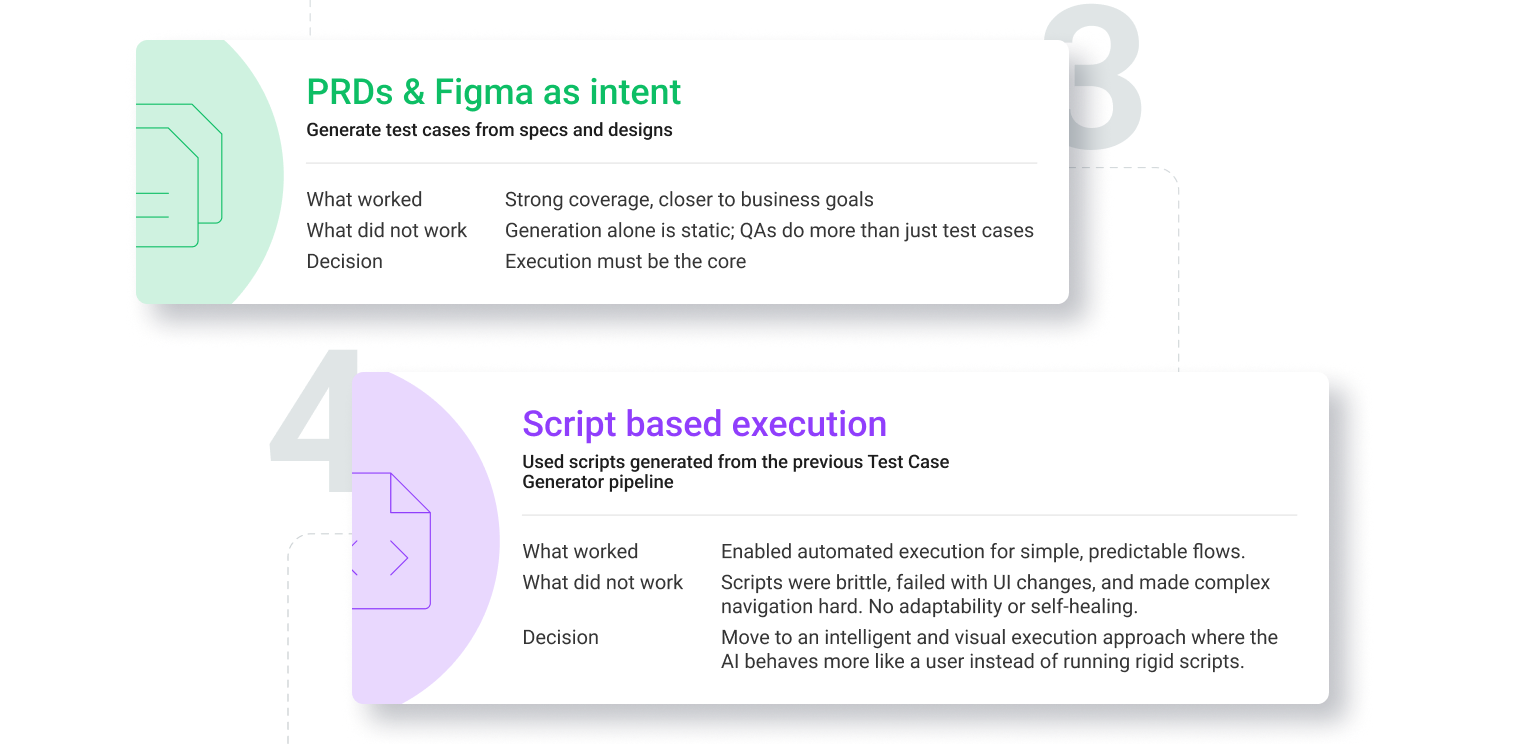

So we went upstream. Instead of starting from code, we began with use cases, the way QAs think. A PRD-to-test-case generator was born almost by accident, and we later refined it with Figma integration. Suddenly, the results were strong enough to feel like a breakthrough. And when bigger players started copying the idea, it confirmed we were pushing in the right direction. Still, the bigger realization was this: generation alone doesn’t capture what QAs actually do. They need to execute, adapt, and validate in real time.

Cracking Execution

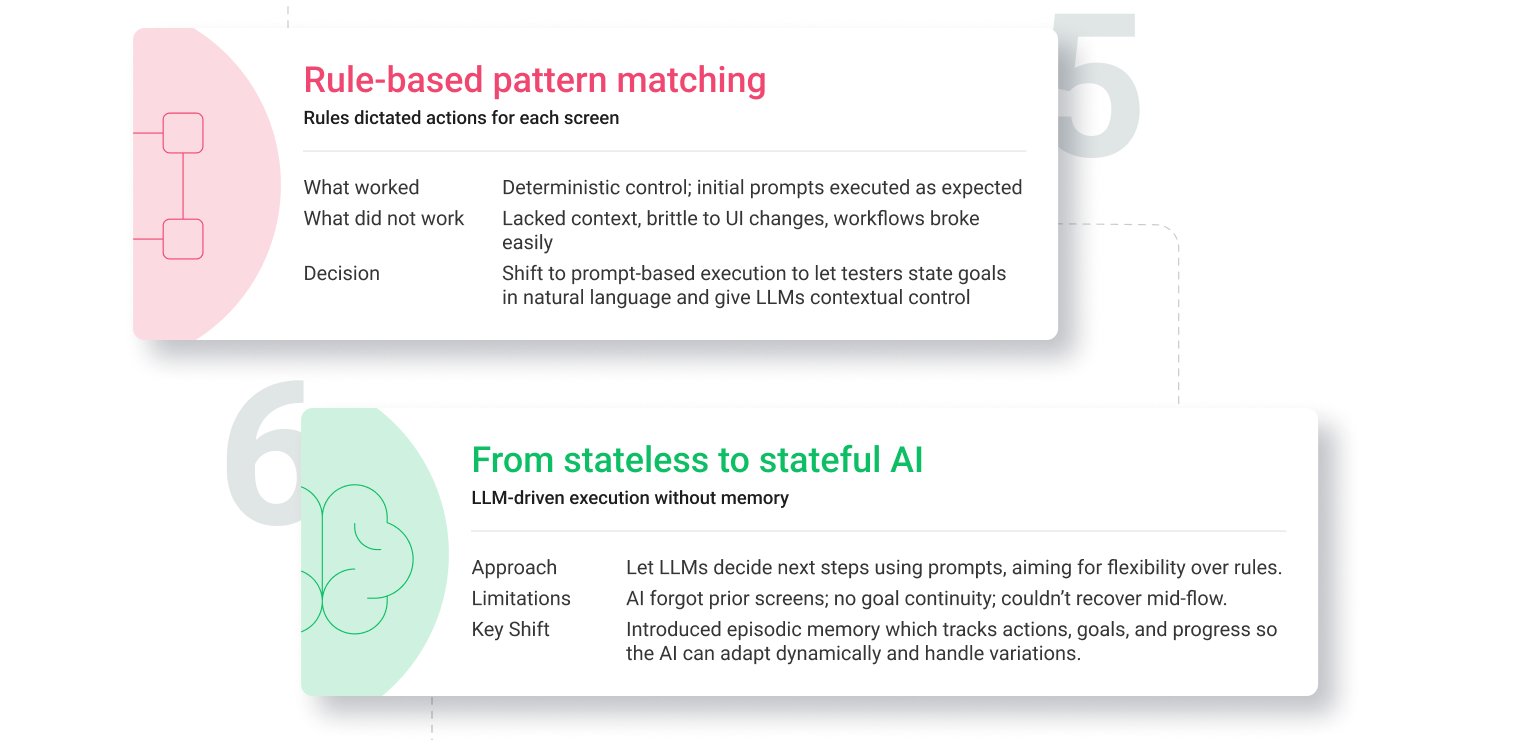

That shifted our focus to execution. Early attempts were fragile and broke as soon as the app changed. We even tried rule-based pattern matching, but it lacked context, and workflows broke easily. So we moved to a prompt-based execution pipeline that let testers express intent clearly. This was promising at first, but the AI often forgot its last action: without memory, it could make no progress.

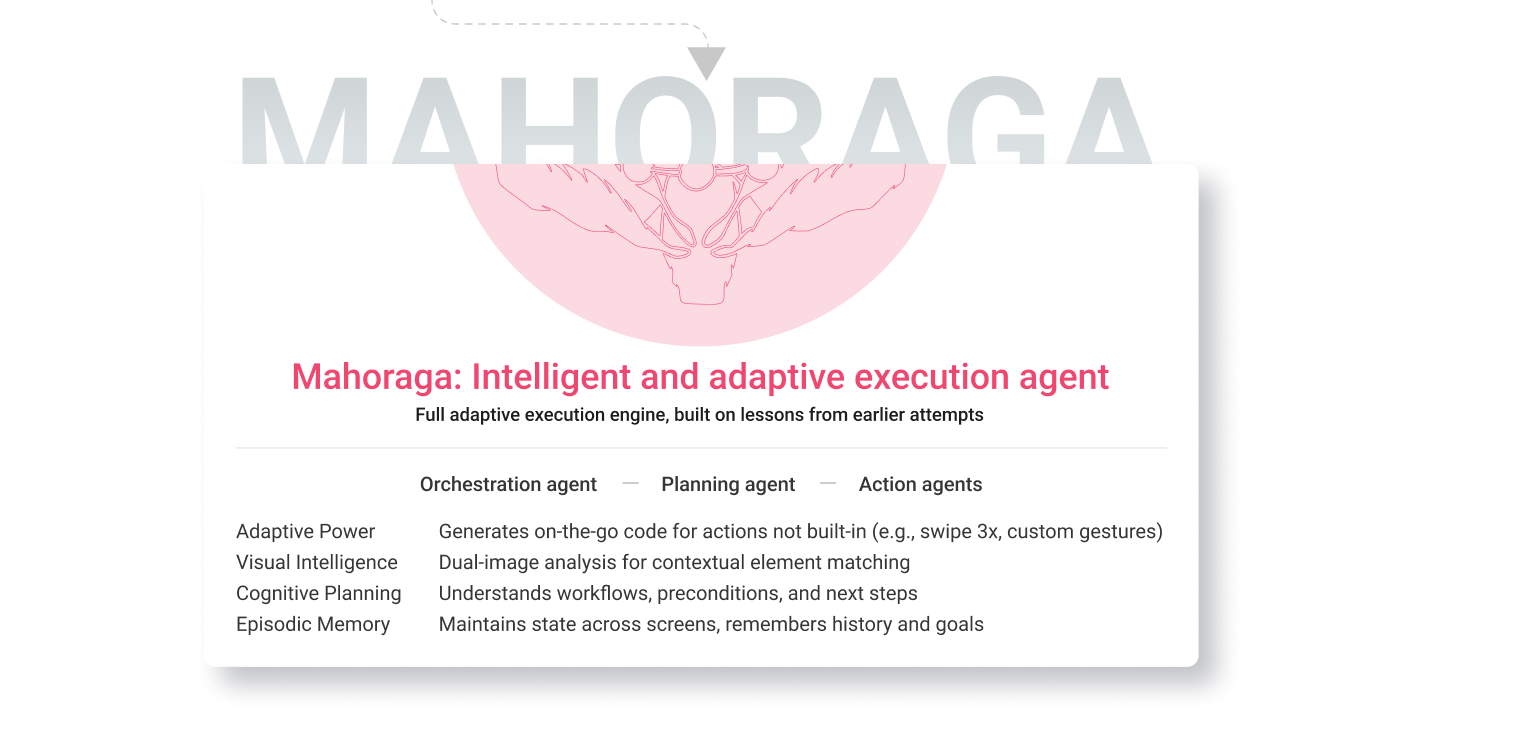

Everything changed when we built episodic memory into the system. Suddenly, the AI could carry goals forward, adapt mid-run, and move through flows with the presence of mind of a human tester. On top of that, we layered visual intelligence, cognitive planning, and multi-agent orchestration. What emerged was Mahoraga: our execution engine that doesn’t just follow instructions, but thinks, adapts, and decides.
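To make the idea of episodic memory concrete, here is a minimal sketch in Python. It is not Mahoraga's implementation: the names `Episode`, `EpisodicMemory`, and `next_action`, the fixed plan, and the `"ok"` observations are all hypothetical, chosen only to illustrate how recording each step lets an agent carry a goal forward instead of forgetting its last action.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Episode:
    """One remembered step: what was done and what was observed."""
    step: int
    action: str
    observation: str


@dataclass
class EpisodicMemory:
    """Hypothetical memory store that travels with the agent for a whole run."""
    goal: str
    episodes: List[Episode] = field(default_factory=list)

    def record(self, action: str, observation: str) -> None:
        # Append the step so later decisions can see it.
        self.episodes.append(Episode(len(self.episodes) + 1, action, observation))

    def last_action(self) -> Optional[str]:
        return self.episodes[-1].action if self.episodes else None

    def as_context(self, window: int = 5) -> str:
        # Render the goal plus the most recent steps, e.g. to prepend to a prompt.
        lines = [f"Goal: {self.goal}"]
        lines += [f"{e.step}. {e.action} -> {e.observation}"
                  for e in self.episodes[-window:]]
        return "\n".join(lines)


def next_action(memory: EpisodicMemory, plan: List[str]) -> Optional[str]:
    """Pick the first planned action that memory says has not been done yet."""
    done = {e.action for e in memory.episodes}
    for action in plan:
        if action not in done:
            return action
    return None


# Toy run: every action "succeeds"; a real executor would observe the app.
memory = EpisodicMemory(goal="Log in and open settings")
plan = ["tap login", "enter credentials", "open settings"]
while (action := next_action(memory, plan)) is not None:
    memory.record(action, "ok")

print(memory.as_context())
```

Because each decision reads from the recorded episodes, the run resumes from where it left off rather than restarting, which is the failure the prompt-only pipeline could not avoid. A production system would presumably derive actions and observations from the live app rather than a fixed list.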

One Cohesive System

Seeing it in action was one of those moments where the room goes quiet. It ran faster and more precisely than anything we’d seen, making decisions mid-flow like a real user. That clarity forced us to simplify everything around it. Two separate pipelines, one for generation and one for execution, only added friction. So we folded them into one cohesive system: cleaner, faster, and better at signaling readiness for every release.

The Road Ahead

That’s where we are now. Not a finished story, but a system that’s beginning to match the way testers actually think. It’s taken years of iteration, missteps, and rethinking, but the direction is clear: QA that feels effortless, that moves at the speed of modern software, and that finally makes testing less of a bottleneck.

~Team Quash
