Is AI in Mobile Testing Worth It? Pros, Cons & ROI

Billions of smartphones. Thousands of device models. Millions of apps.

Mobile app testing today isn’t just complex; it’s relentless. You’re juggling device fragmentation, unpredictable networks, and the never-ending pressure to release faster without breaking anything. That’s where AI testing tools promise to step in: automating the grunt work, catching edge cases, and scaling test coverage in ways traditional methods cannot match.

But let’s cut through the hype: Is AI in mobile testing really worth the investment? This guide breaks down the pros, cons, and ROI so you can make the right decision for your team.

The Real-World Pain Points in Mobile Testing

You already know mobile testing is not like web testing. Between Android’s 19,000-plus devices and Apple’s many iOS versions, testing across that landscape is brutal. And hardware is only part of it. Mobile QA also has to account for several additional layers of complexity:

Network conditions: Testing on Wi-Fi, 3G, 4G, and 5G with inconsistent speeds

Device constraints: Memory, battery, and CPU performance all impact app behavior

User expectations: One bad experience, and nearly 17% of users never return

Add in shrinking release cycles, increasing competition, and user demands for seamless experiences, and you’ve got a recipe for bugs slipping through at an alarming pace.

Traditional testing tools are no longer sufficient. That’s why AI has become an essential evolution in modern QA.

What AI Really Brings to Mobile QA

AI in testing is more than just automation. It is about making your automation smarter. AI adapts, learns, and improves over time. Already, almost half of QA teams (48%) are using AI in some capacity, with another 21% planning to adopt it within the next six months.

Here’s what AI brings to the table in real-world use cases:

Intelligent Test Case Generation

AI tools analyze real user behavior, app architecture, and historical bugs to create high-impact test cases automatically. For example, Quash’s Spec2Test, powered by GPT-4o, can convert your product specifications into complete Gherkin-style test scenarios in under 45 seconds.

Self-Healing Automation

Forget about scripts breaking every time the UI changes. AI-powered frameworks can detect minor changes and adjust themselves, reducing locator maintenance efforts by up to 38% and keeping your pipelines unblocked.
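To make the idea concrete, here is a minimal rule-based sketch of a self-healing locator. It is not how any particular tool works; real frameworks typically score many attributes with a trained model. The `Element` and `HealingLocator` classes and the id values are hypothetical, and the screen is modeled as a plain list rather than a live UI hierarchy.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified stand-in for one UI element on a screen (hypothetical)."""
    resource_id: str
    text: str
    class_name: str


@dataclass
class HealingLocator:
    """Tries the original id first, then falls back to text plus widget class."""
    primary_id: str
    fallback_text: str
    fallback_class: str
    healed: list = field(default_factory=list)  # audit trail of (old_id, new_id)

    def find(self, screen):
        # 1. Exact match on the id the test was written against.
        for el in screen:
            if el.resource_id == self.primary_id:
                return el
        # 2. Fallback: same visible text and same widget class.
        for el in screen:
            if el.text == self.fallback_text and el.class_name == self.fallback_class:
                self.healed.append((self.primary_id, el.resource_id))
                self.primary_id = el.resource_id  # remember the new id
                return el
        return None  # nothing plausible found; the test should fail loudly


# The button id changed between builds, but its text and class did not.
screen_v2 = [
    Element("lbl_title", "Welcome", "TextView"),
    Element("btn_submit_v2", "Sign in", "Button"),
]
locator = HealingLocator("btn_submit", "Sign in", "Button")
match = locator.find(screen_v2)
```

Keeping the `healed` audit trail matters in practice: a silent heal can hide a genuine regression, so reviewers should be able to see every substitution the locator made.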

Predictive Defect Analytics

Machine learning algorithms can identify high-risk areas within your app based on previous releases and defect history. This allows QA teams to focus on areas that are most likely to fail and prevent production bugs proactively.
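As a rough illustration of risk-based prioritization, the sketch below ranks modules with a hand-weighted score. This is a simple heuristic standing in for a trained model, not a machine learning algorithm; the field names, the 0.6/0.4 weights, and the sample data are all assumptions made for the example.

```python
def risk_scores(modules, defect_weight=0.6, churn_weight=0.4):
    """Rank modules by a weighted, normalized risk score (highest first).

    Each module is a dict with hypothetical fields:
      past_defects   - defects logged against the module in earlier releases
      recent_commits - commits touching the module since the last release
    """
    # Normalize against the largest value; fall back to 1 to avoid dividing by zero.
    max_defects = max(m["past_defects"] for m in modules) or 1
    max_commits = max(m["recent_commits"] for m in modules) or 1
    scored = []
    for m in modules:
        score = (
            defect_weight * m["past_defects"] / max_defects
            + churn_weight * m["recent_commits"] / max_commits
        )
        scored.append((m["name"], round(score, 2)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Illustrative data only, not measurements from a real project.
history = [
    {"name": "checkout", "past_defects": 12, "recent_commits": 30},
    {"name": "login", "past_defects": 3, "recent_commits": 5},
    {"name": "settings", "past_defects": 1, "recent_commits": 15},
]
ranked = risk_scores(history)
```

With this sample data, `settings` ranks above `login` despite having fewer past defects, because it changed more recently. That is the kind of trade-off a learned model would tune from real outcomes instead of fixed weights.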

Visual Regression Testing at Scale

AI can detect even the smallest visual changes. It analyzes pixel differences and UI shifts across a broad device matrix, helping you catch layout bugs, misplaced buttons, or broken images that traditional automated tests often miss.
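The pixel-difference part of this can be sketched in a few lines. The example below compares two tiny grayscale "screenshots" held as nested lists and reports the share of pixels that changed beyond a tolerance. The function name, the tolerance of 10, and the sample images are assumptions; production tools add perceptual models, anti-aliasing handling, and ignore regions on top of a comparison like this.

```python
def diff_ratio(baseline, candidate, tolerance=10):
    """Fraction of pixels whose grayscale value differs by more than tolerance."""
    same_shape = len(baseline) == len(candidate) and all(
        len(a) == len(b) for a, b in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("screenshots must have identical dimensions")
    total = sum(len(row) for row in baseline)
    if total == 0:
        raise ValueError("screenshots must not be empty")
    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > tolerance
    )
    return changed / total


# 3x4 grayscale images: a dark 2-pixel "button" shifts one pixel to the right.
baseline = [
    [255, 255, 255, 255],
    [255, 0, 0, 255],
    [255, 255, 255, 255],
]
candidate = [
    [255, 255, 255, 255],
    [255, 255, 0, 0],
    [255, 250, 255, 255],  # 250 vs 255 is within tolerance, so it is ignored
]
ratio = diff_ratio(baseline, candidate)
layout_changed = ratio > 0.05  # hypothetical failure threshold
```

The tolerance absorbs rendering noise such as minor color differences between devices, while the threshold decides how much real change is enough to fail a build.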

Where AI Delivers the Biggest Wins

Faster Testing, Faster Releases

AI drastically reduces the time needed to generate and execute test cases. QA teams report saving up to 40 hours per month, with test authoring time cut by over 90%. One mid-sized company saw savings of nearly $500,000 annually by switching to an AI-powered quality assurance workflow.

Better Coverage, Fewer Escapes

AI-driven testing delivers broad and deep test coverage without fatigue or oversight. Teams using AI typically experience:

80% faster test creation

40% better edge case detection

90% reduction in time spent logging and categorizing bugs

It is not just about speed. It is also about test effectiveness and overall product quality.

Cost Reduction That Adds Up

Fewer bugs in production and fewer engineering hours spent fixing them mean significant cost savings. Large enterprises report up to 30% reductions in QA budgets. Some have reduced their testing costs from over $2 million to just under $300,000 annually.

Remember, identifying a bug post-release can cost up to 15 times more than catching it during development.

Seamless CI/CD Integration

AI-powered tools integrate directly into your CI/CD workflows. Tests are triggered with every commit or push, and results are delivered in real-time. Parallel test execution across multiple device configurations ensures that no bottlenecks slow you down.
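Parallel execution across a device matrix can be sketched with Python's standard `concurrent.futures` module. Everything specific here is an assumption for illustration: the device list, the `run_suite` placeholder (a real pipeline would call a device farm or emulator at that point), and the worker count.

```python
from concurrent.futures import ThreadPoolExecutor

# Illustrative device matrix; substitute the configurations your users run.
DEVICE_MATRIX = [
    {"device": "Pixel 8", "os": "Android 14"},
    {"device": "Galaxy S23", "os": "Android 13"},
    {"device": "iPhone 15", "os": "iOS 17"},
]


def run_suite(config):
    """Placeholder for running the test suite on one device configuration."""
    label = f'{config["device"]} / {config["os"]}'
    # A real implementation would start a remote session here and collect results.
    return {"config": label, "passed": True}


def run_matrix(matrix, max_workers=3):
    """Run the suite on every configuration concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_suite, matrix))


results = run_matrix(DEVICE_MATRIX)
all_green = all(r["passed"] for r in results)
```

Threads suit this workload because each run mostly waits on a remote device. A CI job triggered on each commit could call `run_matrix` and fail the build when `all_green` is false.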

But AI Testing Isn’t a Silver Bullet

It Is Not Plug-and-Play

Many AI tools require setup, integration with your current toolset, and customization. The learning curve can be steep, and onboarding might require dedicated resources or external consultants.

It Requires Quality Data

AI relies on past data to train its models. If your project is new or lacks meaningful testing data, the AI may underperform initially. The more quality input it receives, the smarter it becomes over time.

It Cannot Replace Human Intuition

AI lacks contextual understanding. It may miss bugs related to user experience, business logic, or emotional design. Manual testing remains essential for exploratory testing, usability evaluation, and customer-centric insights.

Upfront Costs Can Be High

Some enterprise AI testing platforms begin at $60,000 or more annually. Combined with implementation, training, and infrastructure, the initial investment can be considerable. For smaller teams, this may not be immediately feasible.

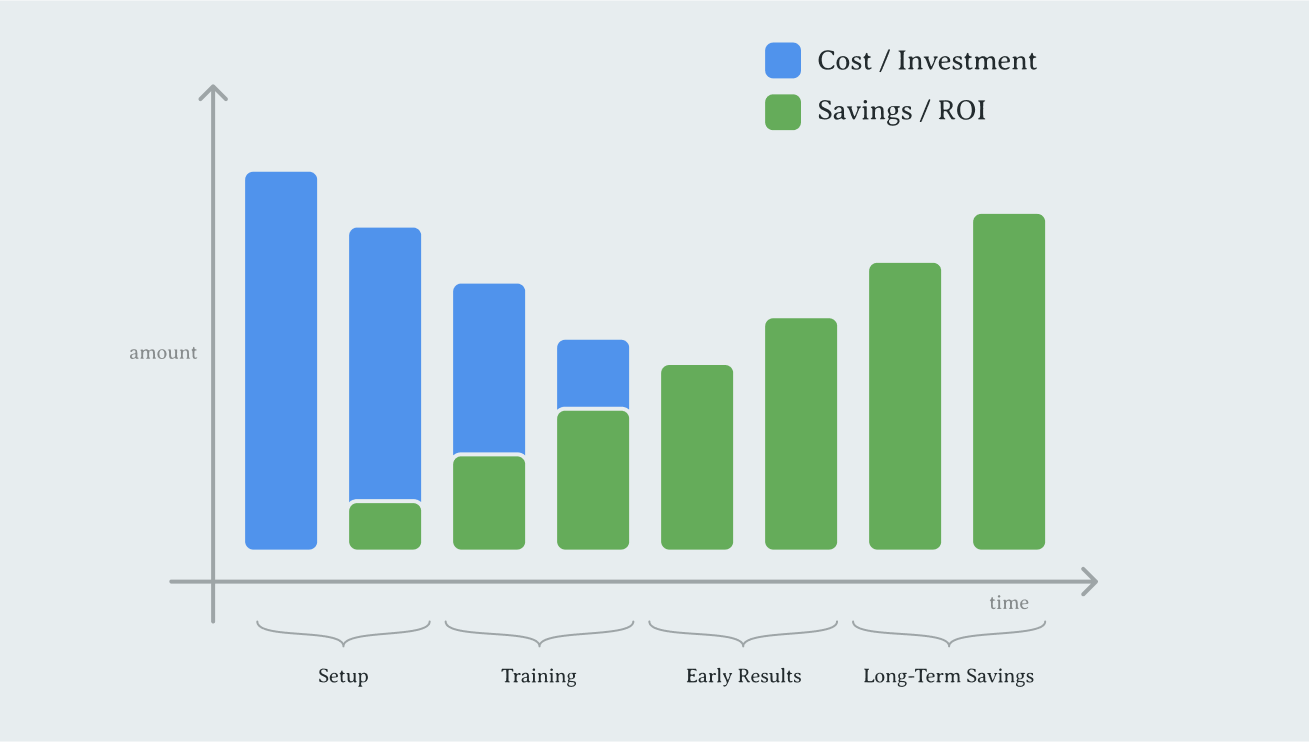

So What's the ROI? And When Do You See It?

The Cost-Benefit Analysis

Investment includes:

Licensing and software setup

Infrastructure expansion

Training and onboarding

Return includes:

Hours saved across testing phases

Decrease in escaped defects

Accelerated release cycles

Higher team productivity

ROI in Action

Let’s say your team saves three days of regression testing per release cycle, with 12 releases per year. That’s 36 days saved. If your average engineer costs $400 per day, you regain $14,400 in productivity annually. Add two prevented production bugs at $5,000 each, and you’re looking at $24,400 saved per year in tangible costs alone.
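The arithmetic above is easy to adapt to your own numbers. The sketch below reproduces it and adds a standard ROI percentage, (savings minus investment) divided by investment. The function names are made up for this example, and the $20,000 investment is a placeholder, not a figure from this article; plug in your actual licensing, infrastructure, and training costs.

```python
def annual_savings(days_saved_per_release, releases_per_year, cost_per_day,
                   bugs_prevented, cost_per_bug):
    """Return (productivity savings, defect savings, total) for one year."""
    productivity = days_saved_per_release * releases_per_year * cost_per_day
    defects = bugs_prevented * cost_per_bug
    return productivity, defects, productivity + defects


def roi_percent(savings, investment):
    """Standard ROI: net gain as a percentage of the amount invested."""
    return (savings - investment) * 100 / investment


# Figures from the example: 3 days x 12 releases x $400, plus 2 bugs x $5,000.
productivity, defects, total = annual_savings(3, 12, 400, 2, 5_000)
print(productivity, defects, total)  # 14400 10000 24400

# Placeholder investment for illustration only.
hypothetical_investment = 20_000
print(roi_percent(total, hypothetical_investment))  # 22.0
```

A negative result means the tool has not paid for itself within the year at that cost, which is worth knowing before you commit to a contract.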

When You Will See ROI

Startups: ROI is often achieved quickly, especially in regression-heavy or continuous delivery environments

Enterprises: Typically realize ROI after three to four testing cycles depending on product complexity and release frequency

Over 60% of organizations report measurable ROI from their AI testing investments.

When AI Is an Obvious Win (And When It Is Not)

Use AI Testing If Your Team Is:

Shipping frequent releases in a CI/CD environment

Supporting a wide range of devices and platforms

Lacking bandwidth for extensive manual testing

Maintaining regression-heavy applications

Requiring pixel-perfect UI and UX consistency

Stick With Manual Testing If You Are:

Testing early-stage prototypes or UX concepts

Running short-term or low-risk projects

Validating complex domain-specific logic

Relying heavily on exploratory or creative testing

AI does not replace human testers. It empowers them.

How to Actually Make AI Work for You

Start Small, Then Scale

Begin with a single area such as regression or visual testing. Set performance benchmarks and iterate. Once you validate initial success, expand to more modules.

Upskill Your QA Team

Give your QA engineers the resources to understand and leverage AI tools effectively. This can include formal training, workshops, or working with expert consultants.

Adopt a Hybrid Model

Leverage AI for repetitive, scalable tasks. Use human insight for nuanced, contextual testing.

| Tasks AI Handles | Tasks Humans Handle |
| --- | --- |
| Regression suites | Exploratory testing |
| Visual comparisons | UX and usability evaluation |
| Performance profiling | Business rule validation |

This synergy creates a resilient, intelligent QA system.

What the Future Holds for AI Testing

AI-powered testing is evolving rapidly. Here are the trends to keep an eye on:

Agentic Test Systems: AI bots that independently plan and execute tests based on risk assessments

Advanced Simulation Environments: Improved testing for 5G, foldables, AR/VR, wearables, and spatial computing interfaces

Predictive Quality Platforms: AI that alerts your team about risk areas before code is even merged

Industry forecasts project the global AI software testing market to reach $3.4 billion by 2033, with a compound annual growth rate (CAGR) of 19%.

Final Verdict: Yes, AI in Mobile Testing Is Worth It If Used Strategically

Adopting AI in mobile testing is not a gimmick or luxury. It is fast becoming the standard for engineering teams that prioritize scalability, efficiency, and quality.

Why AI Is a Smart Investment:

Cost savings ranging from 20% to 50%

Reduced time to market and minimized risk

Broader, deeper test coverage across device matrices

Real-time insights and intelligent prioritization

How to Make It Work:

Start with a targeted rollout and expand strategically

Choose tools that align with your tech stack and objectives

Train your team to lead with both human and AI strengths

Embrace a blended model where AI augments, not replaces, your team

If your testing efforts are slowing your releases, missing bugs, or becoming too resource-heavy, AI offers a way out. But the return only comes when you approach implementation thoughtfully.

In a mobile-first world, the teams that learn to leverage AI early will be the ones that lead the future of quality assurance.

The question is no longer whether you’ll adopt AI in testing. It is how effectively you’ll harness its potential.